5 ways to use advanced testing for better email results

I'm not exaggerating (much) when I say email testing is my life. Whenever I meet with a new email marketing team, one of my first questions is "What kind of email testing do you do?"

That's why I ran a poll on LinkedIn where I asked people, "What is your main goal when performing an A/B split test in email?"

The answer options were "Uplift in results," "Gain insights on my audience," "Both uplifts and insights," and "To follow best practice." The winner? "Both uplifts and insights," chosen by 68% of respondents. Insights alone was a distant second, and nobody said they test because it's a best practice.

Which is a huge relief because it means people are testing to optimize some part of their email program and not just because it's part of the workflow, somebody told them to do it, or their email platform makes it easy to test subject lines.

You might be wondering why I didn't include the option to say "I don't test." First, I might find it too depressing to see that statistic. Second, I wanted to focus on the benefits of testing and learn more about marketers' motivations for testing.

Why you should learn advanced email testing skills

If you don't test now, or if you test only easy, peripheral things like subject lines or calls to action, you're missing a world of helpful information that could guide fresh efforts to improve your email program.

Advanced testing skills are important to learn. Testing can reveal deep insights about your customers and what motivates them to act on your emails and stay engaged with your brand.

More to the point, testing can reveal whether you are investing in the right email strategies and tactics or you're wasting time and money on things that don't work.

Testing investments pay off

One thing I love about email is that you can test just about everything – the subject line (of course!), the offer, the audience and much more. Testing can help you find an immediate lift to improve the campaign you're focusing on now.

But advanced testing also can help you learn even more about your customers, both now and in the future. Do they respond better to emotional appeals, info-heavy content, fear of missing out (FOMO) or impulse shopping?

A single word tweak in a subject line, or testing a blue call-to-action button instead of a red one, won't be enough by itself to drive long-term insights about what motivates your customers. This is "ad hoc" testing, done on the fly, at random, with results that apply mainly to that specific campaign and not to your broader email program.

An approach that regularly tests broader concepts, such as which approaches trigger actions, will be more revealing and useful. This is "holistic email testing," and I'll discuss it in more detail farther down in this post.

Investing in this phase of email marketing will pay off because this advanced testing will help you create messaging that's more finely tuned to all the variations in your audience. You'll avoid wasting time and budget on ineffective campaigns or expensive tactics that don't pan out.

5 common problems with email testing

Many of us learned how to test our email campaigns by using the real-time (10/10/80) testing features in our email marketing platforms. It's better than doing no testing at all. However, it lacks one important factor – a hypothesis to guide our decisions on what to test and what we think will happen.
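For readers who haven't looked under the hood of a 10/10/80 test, here is a minimal Python sketch of the mechanics: two 10% test cells each get one version, and the remaining 80% later receive whichever version wins. The function name, the seed and the 1,000-subscriber list are hypothetical, and real platforms may assign cells differently.

```python
import random

def split_10_10_80(subscribers, seed=0):
    """Randomly split a list into cell A (10%), cell B (10%) and a remainder (80%)."""
    shuffled = list(subscribers)
    # A fixed seed makes the split repeatable; it is an illustrative choice.
    random.Random(seed).shuffle(shuffled)
    cell_size = len(shuffled) // 10
    cell_a = shuffled[:cell_size]
    cell_b = shuffled[cell_size:2 * cell_size]
    remainder = shuffled[2 * cell_size:]
    return cell_a, cell_b, remainder

# Hypothetical list of 1,000 subscriber IDs.
cell_a, cell_b, remainder = split_10_10_80(range(1000))
print(len(cell_a), len(cell_b), len(remainder))  # 100 100 800
```

Notice that nothing in the split itself says what you expect to happen or why. That is the part a hypothesis has to supply.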

These five problems often crop up for email teams that haven't set up strategic testing programs:

- No one on the team is well versed in data science or scientific testing processes.

- Management is unwilling to support the costs of testing and doesn't fully understand the benefits.

- Teams don't like holding out audiences or doing the 50/50 split tests that are often needed to give the campaign time to convert, because of the potential loss of revenue from the audience that doesn't receive the email campaign, or receives the losing version.

- When a team does test email, it's often to seek only an immediate uplift instead of longer-term insights, and a hypothesis isn’t used.

- Marketers often choose the wrong success metric to measure results and inadvertently optimize for the wrong result.

Marketers can overcome these problems by raising the stakes a bit and approaching testing the way a scientist would.

Advanced testing uses scientific principles

Testing requires us to think like scientists as well as marketers. But if a science-based word like "hypothesis" gives you nightmares about your high school chemistry class, here's a refresher.

The hypothesis is your statement about what you think might happen when you bring a number of testing factors together. This is the basis of your test because it identifies what you'll test, how you'll measure results, what technology or channel you'll use to perform the test and what your expected outcome will be.

In a test we recently conducted for a client as part of our work building a browse-abandonment email automation program, we used this hypothesis, which was based on a holistic approach that uses language and emotions to persuade a browser to act:

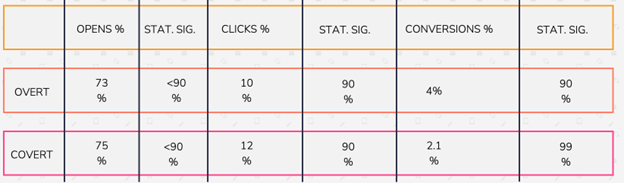

"A browse abandonment email with an overt request asking the customer to return to the website to purchase a browsed product will generate more orders than one using a covert approach that focuses on product benefits."

This hypothesis is an example of one you would use in advanced testing because it involves multiple elements (subject line, message copy, call to action and more) and aligns with a strategic objective (increasing orders) instead of driving temporary results, such as higher opens or clicks.

It's a cornerstone of holistic testing because it considers multiple factors that drive email success, not just a single element.

Here’s a sneak peek at the results we gained. Results like this, from testing and from applying the insights to other channels, have helped contribute to a 26.4% increase in total revenue for our client, directly attributed to email marketing.

5 steps to improve results from email testing

These five measures will help you improve your program and make it more efficient and effective:

1. Set up a testing plan.

A testing plan starts with your hypothesis, but it goes even farther because it lays out every aspect of your testing. It begins with your objective, an important step because the objective will guide every decision you make.

Other parts of a testing plan include how you'll measure statistical significance, your hypothesis, how long to run the test (if testing an automated program) or sample size to use (if testing a campaign), which factors you'll test, and the content you'll use from the subject line to the call to action.
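To make the sample-size part of the plan concrete, here is a rough Python sketch using the standard two-proportion approximation. The 2% baseline order rate, the 2.5% target, the 5% significance level and the 80% power are illustrative assumptions, not figures from any client; your own plan should use your own numbers.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Approximate recipients needed per variant to detect the given lift
    in a two-sided, two-proportion test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)

# Hypothetical plan: 2% baseline order rate, hoping to detect a lift to 2.5%.
needed = sample_size_per_variant(0.02, 0.025)
print(f"Recipients needed per variant: {needed}")
```

The smaller the lift you want to detect, the more recipients each version needs, which is one reason to settle the success metric and expected outcome before you send.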

2. Choose the right success metric.

I can't emphasize this too much. How you'll measure success is a key element of your hypothesis. Relying on the wrong success metric can mask poor results and mislead you into optimizing for the wrong outcome. This wastes time and budget in the short run and costs you major lost opportunities for revenue, audience engagement and business support in the long run.

The hypothesis I used as an example earlier measures success by the number of orders placed based on the email. This ties into our client's objective: to recover more potentially lost sales from browsers who leave their site without buying.

As you saw, we found the email version using covert language delivered more opens and clicks than the overt email. But it delivered 90% fewer orders than the overt version with 99% statistical significance. Had we gone with an easy-to-track metric like opens or clicks, our client would have lost sales and revenue.
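If you want to check significance on orders yourself, one common approach is a two-proportion z-test. The sketch below is a minimal Python version. The order and send counts are made up for illustration and are not our client's data, and this is not necessarily the method your testing platform uses.

```python
import math
from statistics import NormalDist

def two_proportion_z_test(orders_a, sent_a, orders_b, sent_b):
    """Return the z statistic and two-sided p-value for the difference
    between two order rates."""
    rate_a = orders_a / sent_a
    rate_b = orders_b / sent_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (orders_a + orders_b) / (sent_a + sent_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    z = (rate_a - rate_b) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical counts: overt version 120 orders from 5,000 sends,
# covert version 80 orders from 5,000 sends.
z, p_value = two_proportion_z_test(120, 5000, 80, 5000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
print("Significant at 99%" if p_value < 0.01 else "Not significant at 99%")
```

Run the same check on opens or clicks and you may get a different "winner," which is exactly why the success metric has to be chosen first.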

3. Test beyond the subject line and call to action.

Subject-line testing gets all the attention in many basic A/B testing platforms, but that's where your email testing practice should begin, not end. Yes, it's important because you want to know what language compels more subscribers to open your email. But opens and clicks don't always correlate to business objectives, as my client's example demonstrates.

Ad hoc testing relies on simple tests like these, which pit two versions of an element against each other. The problem is that these single elements don't operate in a vacuum.

The answer is to adopt a holistic testing plan, which considers multiple factors. In our client's case, we created two versions of the subject line, image, copy, and call to action, all of which supported the hypothesis, and then tested the two versions against each other.

This kind of test can give you deeper insights into what motivates your customers instead of a fleeting glance at something that works in the moment but not necessarily in subsequent campaigns.

4. Keep on testing.

One test doesn't tell you much. That's one of the problems with ad hoc testing. The winner of a subject line test in Campaign A might not – well, probably won't – predict success in Campaign B.

Testing delivers the most useful results when you do it continuously. Build it into your campaign workflow, and then apply what you learn throughout your email program, not just the next campaign.

Regular testing is a key component of holistic testing because the broader insights you achieve with it give you the most useful and accurate picture of your customers.

5. Roll out your testing results to the rest of your marketing team and throughout the company.

All too often, email gets sidelined on the marketing team as just the discount channel that delivers quick sales. But think about the people who open, click on and convert from your email campaigns. Those are your customers – your company's customers! When you test your emails, you're doing research on your target market for much less expense than engaging a research firm or driving unqualified unknowns to the site using PPC.

Take what you have learned about your customers using holistic testing and test to see if it applies to other marketing channels. And don't stop there! You can take all of this rich customer insight and share it throughout the company.

Overcome the challenges of testing

Most marketers' objections to testing center on lack of company support. Marketers say they don't have the testing knowledge, the access to an advanced testing platform, or the time and budget needed to set up and manage an ongoing testing program.

Others are worried about losing sales from customers who either don't see optimized versions of email campaigns or get excluded from all emails in an effort to measure the impact of email communications.

I understand these objections, but I also know the costs of not testing or of inaccurate or incomplete testing can outweigh any testing expense. When done right, testing is a revenue driver for the email channel, and should be considered an investment, not an expense.

When you use a holistic methodology and share the insights with other channels, testing becomes a revenue driver for the whole company, with multiple channels benefiting from it.

Photo by Girl with red hat on Unsplash
