Testing to Boost Performance: The 3 Biggest Mistakes Marketers Make and How to Address Them

It’s lovely to see more organizations consistently testing to boost performance! But it’s frustrating to see marketers running tests that return inconclusive or just plain useless results. Here are three of the most common testing mistakes my team and I run across when working with clients, along with tips for how you and your team can avoid them in the future!

1. Setting the Stage for Muddled Results

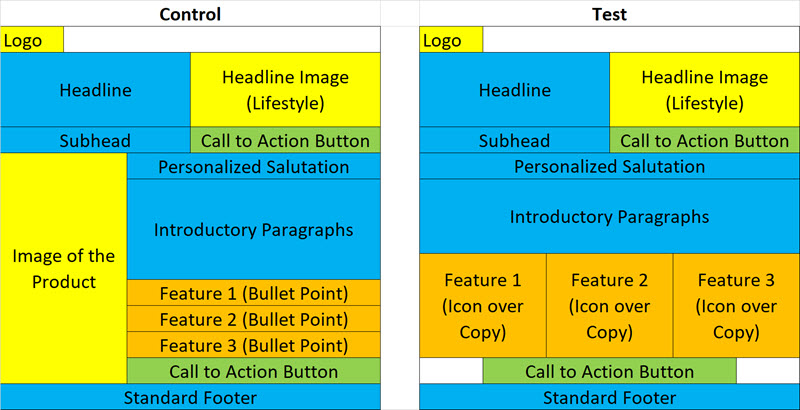

Here’s an actual case study. See if you can identify the ‘oops’ in this test, which was destined to deliver muddled results. The goal of the email message is to get existing customers to sign up for a new, free service.

Hypothesis: Using icons to illustrate the features will entice more people to sign up and, as a result, boost conversions.

Creative Execution (Wireframes; features are in orange):

The test version’s conversion rate lagged the control’s by 7%. But take a close look: can we really say that using icons to illustrate the features depressed response?

Perhaps adding the icons had no impact and removing the image of the product depressed response for the test version.

Or perhaps adding the icons increased response but removing the product image decreased response by a greater amount, netting out at a loss for the test version.

This is a classic case of muddled results. The only way to find out whether using icons to illustrate the features will boost response is to keep everything else in the email the same. That means not removing the product image (or anything else): just add the feature icons and keep the rest of the email as close to the control as possible.
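The case study reports the gap as a percentage without saying whether it is a relative difference or a difference in percentage points. Assuming it is relative (the usual way lift is reported), the arithmetic looks like this minimal Python sketch. The `relative_lift` helper is hypothetical, and the conversion rates are made up for illustration, not taken from the test.

```python
def relative_lift(test_rate: float, control_rate: float) -> float:
    """Return the test rate's change relative to the control rate.

    -0.07 means the test lagged the control by 7% of the control's rate,
    which is not the same as a 7-percentage-point drop.
    """
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (test_rate - control_rate) / control_rate


# Hypothetical rates for illustration only (not the case study's data):
control_rate = 0.100  # 10.0% of control recipients converted
test_rate = 0.093     # 9.3% of test recipients converted
lift = relative_lift(test_rate, control_rate)
print(f"{lift:.1%}")  # prints -7.0%
```

With these made-up rates, the same gap is only 0.7 percentage points. That is why it helps to state which kind of difference you are reporting.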

2. Testing Only a Single Execution of a Big Idea

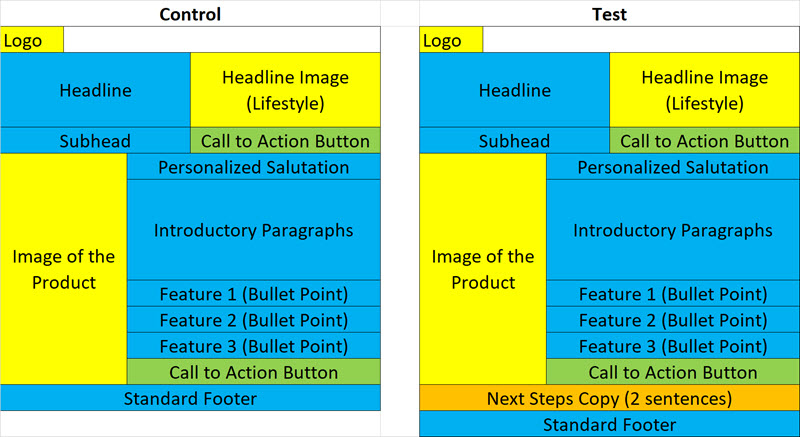

Here’s another actual case study. See if you can spot the flaw in the test. It comes from the same organization as the last one and has the same goal: getting existing customers to sign up for a free service.

Hypothesis: Adding information on the ‘next steps’ that follow a click will motivate more people to click through and convert and, as a result, boost response.

Creative Execution (Wireframes; ‘next steps’ copy is in orange):

The test’s conversion rate lagged the control’s by 6%. But once again, take a closer look: can we really conclude that adding information on next steps won’t boost response?

This time the problem isn’t muddled results. It’s that only one execution of the hypothesis was tested. If the hypothesis were tested in a different way, it might boost response. For instance:

Placement

Look at the placement of the copy. It sits just above the footer, below the final call-to-action button, where it could easily be missed. The same copy might have boosted response if it had been placed higher up, say above the final call-to-action button or even higher. It’s not a lot of copy (just two short sentences), so it would not be difficult to fit in.

Format

Right now, ‘next steps’ is two sentences of copy describing three steps. What about riffing off the first example and using icons to illustrate these next steps? You could either use ‘1, 2, 3’ icons to number the steps or use icons that illustrate each step, with copy underneath (as with the feature icons in the first example).

If you truly believe that adding next steps will boost response (and, by the way, if you don’t believe this you probably shouldn’t be testing it), the best way to test it is to go all out. Think of all the ways you could add this to the email – then choose the 3 to 5 ideas that you believe have the best chance of success and test all of those, head to head against each other and the control (which doesn’t have any ‘next steps’ language).
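Testing several executions head to head means splitting the list into one randomly assigned cell per version, so that any difference in results comes from the creative and not from who happened to receive it. Below is a minimal Python sketch of that split. The `assign_cells` helper, the recipient addresses, and the cell names are hypothetical, and your email platform may already handle the split for you.

```python
import random


def assign_cells(recipients, cell_names, seed=None):
    """Randomly split recipients into cells whose sizes differ by at most one."""
    names = list(cell_names)
    rng = random.Random(seed)  # seeded so the same split can be reproduced
    shuffled = list(recipients)
    rng.shuffle(shuffled)
    cells = {name: [] for name in names}
    # Deal the shuffled recipients round-robin so each cell gets a random, equal share.
    for i, recipient in enumerate(shuffled):
        cells[names[i % len(names)]].append(recipient)
    return cells


# Hypothetical list and cell names: a control plus three 'next steps' executions.
recipients = [f"user{i}@example.com" for i in range(1000)]
cells = assign_cells(
    recipients,
    ["control", "next_steps_higher", "next_steps_icons", "next_steps_numbered"],
    seed=42,
)
for name, members in cells.items():
    print(name, len(members))
```

Keep in mind that every extra execution shrinks each cell. With three to five test versions plus a control, check that each cell is still large enough to give you a readable result.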

3. Choosing the Wrong Key Performance Indicator (KPI)

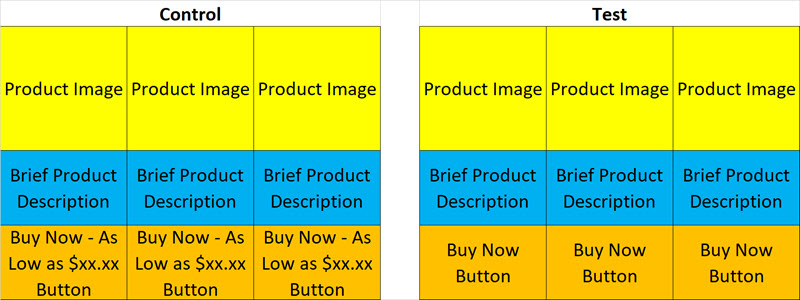

One final real-life case study. See if you can find the flaw. Here the email’s goal is to sell products to new and existing customers.

Hypothesis: Removing prices from the email message will cause more people to click-through and, as a result, boost revenue.

Creative Execution (wireframe of just part of the body of this catalog-style email; buttons with pricing are in orange):

The test beat the control soundly with a 35% higher click-through rate (CTR). But think this through – is CTR really the right KPI for this test?

KPIs should always be as directly related as possible to your bottom line. In this instance, the goal is to sell products. So good KPIs would be return-on-investment (ROI), return-on-ad-spend (ROAS), revenue-per-email (RPE) or just about anything related to revenue.

It’s especially important to have the proper KPI when you’re testing something like whether to include pricing, because pricing is a direct barrier to sale.

There are likely people who clicked through on the email without prices just to find out what the prices were. That makes them less qualified prospects than the people who clicked through on the email with prices, who already knew the cost and were farther down the sales funnel.

The way to remedy this mistake is to go back and tally the revenue generated by each email. The winner should be the email creative that drove the higher ROI, ROAS or RPE, not the one with the higher CTR. The way to avoid this mistake in the future is to always use a business metric (ROI, ROAS, RPE, conversion rate, etc.) as your KPI.
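Re-scoring a test on a revenue metric only requires the send count, clicks, revenue and cost for each version. The Python sketch below assumes common definitions (RPE = revenue / emails sent, ROAS = revenue / cost, ROI = (revenue - cost) / cost). Your team may define them differently, for example per email delivered rather than per email sent. The `VariantResult` class, the `winner` helper, and all the figures are hypothetical. They were chosen only to show how a version can win on CTR and still lose on revenue.

```python
from dataclasses import dataclass


@dataclass
class VariantResult:
    name: str
    emails_sent: int
    clicks: int
    revenue: float
    cost: float  # assumption: total cost attributed to this version of the email

    @property
    def ctr(self) -> float:
        return self.clicks / self.emails_sent

    @property
    def rpe(self) -> float:
        return self.revenue / self.emails_sent  # revenue per email sent

    @property
    def roas(self) -> float:
        return self.revenue / self.cost

    @property
    def roi(self) -> float:
        return (self.revenue - self.cost) / self.cost


def winner(results, kpi):
    """Name of the version with the highest value for a KPI ("ctr", "rpe", "roas", "roi")."""
    return max(results, key=lambda r: getattr(r, kpi)).name


# Made-up figures for illustration only; equal sends and equal cost per version.
control = VariantResult("control_with_prices", emails_sent=10_000, clicks=400, revenue=12_000.0, cost=500.0)
test = VariantResult("test_no_prices", emails_sent=10_000, clicks=540, revenue=10_500.0, cost=500.0)
results = [control, test]

for kpi in ("ctr", "rpe", "roas", "roi"):
    print(kpi, winner(results, kpi))
```

With these made-up figures, the no-prices version wins on CTR (5.4% vs. 4.0%, a 35% relative lift) but loses on RPE ($1.05 vs. $1.20), as well as on ROAS and ROI.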

Testing is the best way to boost bottom-line performance! But be sure you are executing your tests in a way that delivers solid results and drives your email marketing program forward. Try this with your email marketing team, and let me know how it goes!
